An abstract model for digital image processing (DIP) came to my mind. It contains four blocks, viz. Acquire, Transfer, Display and Interpret. This post is the second part of the series. Please refer to the earlier post (November 2013) for a detailed introduction and for the Acquire block.

Transfer Block:

It lies between the Acquire and Display blocks. As shown in Figure 1, both the input and the output of the Transfer block are digital data. It acts as a data compressor at the transmitting side and as a data expander at the receiving side (Display block). This is needed because uncompressed image data is voluminous, and sending it as it is wastes channel capacity and storage. Figure 1 describes a scenario. Here a person shoots an image using a digital camera and transfers the image to a hard disk in the JPEG file format. Later it is opened and displayed on the computer screen. The camera functions as the Acquire block as well as the transmitter side of the Transfer block. The computer functions as the receiver side of the Transfer block as well as the Display block.

Figure 1 Digital Camera and Computer Interface

The transfer of digital data between the Transfer sub-blocks occurs through a communication channel. This may be a wired (cable) or a wireless medium. By nature all communication channels are noisy. It means that some of the data sent over the channel may get corrupted (1 becomes 0 or 0 becomes 1). A channel is considered good if, out of one million bits, only one bit gets corrupted (1 out of 1000000). Various measures are taken to minimize or eliminate the impact of noise. Adding extra bits to detect as well as to correct the corrupted bits is one such measure. In CDs and DVDs, Reed-Solomon codes are extensively used. For further information search Google with the keyword “Channel Coding.”

One may wonder how a CD becomes a communication channel. Normally the transfer of data from point 'A' to point 'B' takes place via a channel and takes a finite time (of the order of milliseconds). If the transfer of data takes near-infinite time, then the data can be considered as stored. From this viewpoint, transmission of data and storage (on a CD or DVD) are functionally the same.
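The idea of adding extra bits to detect and correct a corrupted bit can be sketched with a Hamming(7,4) code. This is a much simpler code than the Reed-Solomon codes used in CDs and DVDs, and it is chosen here only for illustration. The function names and the sample data are my own assumptions.

```python
def hamming74_encode(d):
    """Encode 4 data bits [d1, d2, d3, d4] into a 7-bit codeword."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    # Codeword layout (positions 1..7): p1 p2 d1 p3 d2 d3 d4
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(c):
    """Correct at most one flipped bit and return the 4 data bits."""
    c = list(c)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    error_pos = s1 + 2 * s2 + 4 * s3  # 0 means no error, else 1-based position
    if error_pos:
        c[error_pos - 1] ^= 1  # flip the corrupted bit back
    return [c[2], c[4], c[5], c[6]]


data = [1, 0, 1, 1]                # hypothetical 4 bits to send
sent = hamming74_encode(data)
received = list(sent)
received[4] ^= 1                   # the noisy channel flips one bit
recovered = hamming74_decode(received)
print(sent, received, recovered)
```

Three extra bits protect four data bits here, so the price of error correction is a larger amount of data on the channel.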

Why compression?

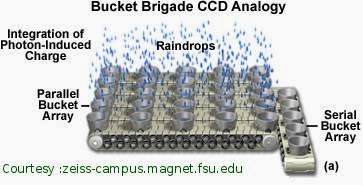

The photons are converted into charge by the image sensor. All the charges are read out and they form an electrical signal. This analog signal is sampled and quantized to produce a digital signal. Thus each sensor element's photon accumulation is represented by a pixel holding a digital value. Because of spatial sampling, the resultant data is voluminous.

An image is made up of rows and columns of pixels. The rows and columns of data can be represented in matrix form. Programmers consider an image as an array. Each pixel may require anything from one bit to several bytes to represent its digital value. A pixel from a two-colour image (say black and white) requires only one bit. A gray scale image uses 8 bits per pixel to represent shades of gray. Black and White TV images fall under this (gray image) category. A colour image is composed of red, green and blue components. Each component requires 8 bits. Thus 24 bits (3 bytes) are required for each pixel. An HDTV size frame (image) possesses 1920 x 1080 pixels and requires 6075 KB (1920 x 1080 x 3 bytes) of storage. A one-minute video at 25 frames per second requires about 8900 MB (6075 KB x 25 x 60). Thus half a minute of video will gobble up one single-layer DVD (4.7 GB), and a single 90-minute movie would require about 170 DVDs. One may wonder how an entire Hollywood movie (nearly 90 to 100 minutes) is put on a single DVD. The answer is compression. The main objective of this example is to make us realize the mammoth data size of images.
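The arithmetic above can be rechecked with a few lines of Python. The frame rate of 25 frames per second, the 90-minute running time and the round figure of 4700 MB per DVD are assumptions implied by the rough numbers in the text, not exact specifications.

```python
WIDTH, HEIGHT = 1920, 1080   # HDTV frame size in pixels
BYTES_PER_PIXEL = 3          # 8 bits each for red, green and blue
FPS = 25                     # assumed frame rate
MOVIE_MINUTES = 90           # assumed movie length
DVD_MB = 4700                # assumed capacity, a rounded 4.7 GB

frame_kb = WIDTH * HEIGHT * BYTES_PER_PIXEL / 1024
minute_mb = frame_kb * FPS * 60 / 1024
dvds_for_movie = minute_mb * MOVIE_MINUTES / DVD_MB

print(f"one frame  : {frame_kb:.0f} KB")
print(f"one minute : {minute_mb:.0f} MB")
print(f"one movie  : {dvds_for_movie:.0f} DVDs")
```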

Solution: Remove Redundancy

A high amount of correlation exists between pixels in continuous-tone images (the typical image from a digital camera). Thus one can guess a pixel value by knowing the values of its neighbouring pixels. Put another way, the difference between a pixel and its neighbours is usually very small. Engineers exploit this feature to compress an entire Hollywood movie onto a DVD.
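A small sketch shows why small neighbour differences help. The row of pixel values below is made up, and storing differences (delta coding) is only one simple way of exploiting the correlation; it is not the method used by JPEG.

```python
def delta_encode(row):
    """Keep the first pixel, then store each pixel minus its left neighbour."""
    return [row[0]] + [row[i] - row[i - 1] for i in range(1, len(row))]


def delta_decode(deltas):
    """Rebuild the pixel row by adding the differences back up."""
    row = [deltas[0]]
    for d in deltas[1:]:
        row.append(row[-1] + d)
    return row


row = [100, 102, 103, 105, 106, 108, 109, 111]  # hypothetical smooth image row
deltas = delta_encode(row)
print(deltas)
print(delta_decode(deltas) == row)
```

The pixel values need 8 bits each, while the differences after the first value are tiny numbers that can be coded with far fewer bits.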

Redundancy can be classified into interpixel redundancy, temporal redundancy and psychovisual redundancy. Temporal (time) redundancy exists in video only and not in a still image. Our eyes are more sensitive to gray scale (brightness) variation than to colour variation. This is an example of psychovisual redundancy. By reducing redundancy, high compression can be achieved.

Transform coding converts the spatial domain signal (image) into a spatial frequency domain signal. In the spatial frequency domain, the first few coefficients have large amplitudes (values) and the rest of the coefficients have very small amplitudes. In Figure 2, bar height represents the value. White bars represent the pixels and pink bars represent the DCT coefficients. The real compression occurs through proper quantization of the coefficient amplitudes. The low-frequency components, i.e. the first few coefficients, are mildly quantized. The high-frequency coefficients, i.e. the rest of the coefficients, are severely quantized and the outcome is at or near zero. High-frequency signals are thus highly attenuated (suppressed) but not entirely eliminated. The feel of image crispness arises from the presence of high spatial frequency components. Once the high-frequency signals are removed, the image becomes blurred. In JPEG, colour images are mapped into one luminance and two chrominance layers (YCbCr), and the Cb and Cr layers are heavily quantized (psychovisual redundancy) to achieve high compression.

Figure 2 Spatial domain and Spatial Frequency domain. Courtesy [1] hdtvprimer.com
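Transform coding followed by quantization can be tried out on a single row of eight pixels. This is a minimal sketch: the pixel values and the quantization step sizes are made up for illustration and are not the JPEG tables, and a one-dimensional DCT is used where JPEG works on 8 x 8 blocks.

```python
import math


def dct(x):
    """Orthonormal DCT-II of a list of samples."""
    n = len(x)
    out = []
    for k in range(n):
        s = sum(x[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n))
        scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
        out.append(scale * s)
    return out


def idct(c):
    """Inverse of dct(): rebuild the samples from the coefficients."""
    n = len(c)
    out = []
    for i in range(n):
        s = 0.0
        for k in range(n):
            scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
            s += scale * c[k] * math.cos(math.pi * (i + 0.5) * k / n)
        out.append(s)
    return out


pixels = [100, 102, 103, 105, 106, 108, 109, 111]  # hypothetical image row
steps = [4, 4, 8, 16, 32, 64, 64, 64]              # hypothetical step sizes

coeffs = dct(pixels)
levels = [round(c / q) for c, q in zip(coeffs, steps)]  # transmitter side
dequantized = [l * q for l, q in zip(levels, steps)]    # receiver side
reconstructed = [round(v) for v in idct(dequantized)]

print([round(c, 2) for c in coeffs])
print(levels)
print(reconstructed)
```

The small step sizes keep the low-frequency coefficients almost intact, while the large step sizes turn the high-frequency coefficients into zeros. The reconstructed row is close to the original row but not identical to it.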

The quantized coefficients are coded using a Variable Length Code (VLC) and then sent to the receiver or put into a storage device. In a VLC, frequently occurring symbols are allotted fewer bits and rarely occurring symbols are allotted more bits. A very good example of a VLC is Morse code. The well-known Save Our Souls (SOS) signal is represented as dot dot dot dash dash dash dot dot dot (...---...). In the English language E and T occur most frequently, so they are given the shortest codes (a single dot and a single dash). Less frequently occurring letters like Q, X and Z have longer codes. The Huffman code is a VLC that provides very good compression.
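A Huffman code can be built in a few lines with a priority queue. This is a minimal sketch; the function name `huffman_code` and the sample text are my own, and real image coders use predefined or carefully packed tables rather than this toy version.

```python
import heapq
from collections import Counter


def huffman_code(text):
    """Return a dict mapping each symbol in text to its Huffman bit string."""
    freq = Counter(text)
    # Heap entries: (weight, unique tie-breaker, {symbol: code built so far})
    heap = [(w, i, {sym: ""}) for i, (sym, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    if len(heap) == 1:                      # only one distinct symbol
        return {sym: "0" for sym in heap[0][2]}
    counter = len(heap)
    while len(heap) > 1:
        w1, _, t1 = heapq.heappop(heap)     # two least frequent groups
        w2, _, t2 = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in t1.items()}
        merged.update({s: "1" + c for s, c in t2.items()})
        heapq.heappush(heap, (w1 + w2, counter, merged))
        counter += 1
    return heap[0][2]


text = "aaaaaaaabbbbccd"                    # hypothetical symbol stream
codes = huffman_code(text)
encoded = "".join(codes[ch] for ch in text)
print(codes)
print(len(encoded), "bits instead of", 2 * len(text), "bits with a fixed 2-bit code")
```

The most frequent symbol gets a one-bit code and the rarest symbols get three-bit codes, which is exactly the behaviour described above.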

At the receiver side the VLC is decoded. The reverse operation of quantization is carried out, and the transform coefficients are converted back into the spatial domain to produce the reconstructed image. The severity of quantization and the file size are inversely related: the more severe the quantization, the smaller the file. Image quality and quantization severity are also inversely related. Quantization causes an irrecoverable loss of signal, i.e. it is impossible to recover the original signal from the quantized signal. At moderate quantization, the compressed JPEG image and the original image are practically indistinguishable to our eyes.

Compression of images using quantization of spatial frequency coefficients is called lossy compression. This method is generally not acceptable for medical images and scanned legal documents, so lossless compression is used for them. As a rough illustration, an image with a 100 KB file size may be compressed to about 5 KB using lossy compression, but only to about 35 KB with lossless compression. Both lossy and lossless compression are possible with JPEG. The advanced version of JPEG is JPEG2000. In JPEG2000 the wavelet transform is used instead of the Discrete Cosine Transform (DCT).

Spatial Domain Compression

Transform coding performs poorly on cartoon images with limited colours and on line-art images. For such images the exploitation of correlation can be carried out in the spatial domain itself. A VLC can be used to compress this sort of image, but the underlying source probabilities of the image are required for efficient compression. To overcome this problem, dictionary codes are used. All ZIP compression applications use dictionary coding. This coding method was developed by Lempel and Ziv way back in 1977 and was named LZ77. The next year, LZ78 arrived. Later, in 1984, Welch modified LZ78 to make it more efficient. It was named LZW. In 1987 the Graphics Interchange Format (GIF) was introduced by CompuServe. It uses LZW. A few years later people came to know that LZW was a patented technique. This caused jitters among Web developers and users. Programmers came out with an alternative image standard called Portable Network Graphics (PNG) to end the dominance of GIF. PNG uses the DEFLATE method, which combines LZ77 with Huffman coding, and is patent free. In dictionary coding, the encoder searches for repeated patterns and then the patterns are coded. The longer the patterns, the better the compression.
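Dictionary coding can be illustrated with a bare-bones LZW encoder and decoder. This is only a sketch of the idea: it emits a plain list of integer codes and ignores the variable-width code packing, dictionary size limits and clear codes that a real GIF encoder needs. The sample data is made up.

```python
def lzw_encode(data):
    """Turn bytes into a list of dictionary codes."""
    dictionary = {bytes([i]): i for i in range(256)}  # all single bytes
    next_code = 256
    current = b""
    output = []
    for byte in data:
        candidate = current + bytes([byte])
        if candidate in dictionary:
            current = candidate               # keep growing the pattern
        else:
            output.append(dictionary[current])
            dictionary[candidate] = next_code  # learn the new pattern
            next_code += 1
            current = bytes([byte])
    if current:
        output.append(dictionary[current])
    return output


def lzw_decode(codes):
    """Rebuild the bytes, re-creating the same dictionary on the fly."""
    if not codes:
        return b""
    dictionary = {i: bytes([i]) for i in range(256)}
    next_code = 256
    previous = dictionary[codes[0]]
    result = [previous]
    for code in codes[1:]:
        if code in dictionary:
            entry = dictionary[code]
        elif code == next_code:               # pattern defined by itself
            entry = previous + previous[:1]
        else:
            raise ValueError("invalid LZW code")
        result.append(entry)
        dictionary[next_code] = previous + entry[:1]
        next_code += 1
        previous = entry
    return b"".join(result)


data = b"ABABABABABABABAB"                    # hypothetical repetitive row
codes = lzw_encode(data)
print(len(data), "bytes ->", len(codes), "codes")
print(lzw_decode(codes) == data)
```

No probability table is sent to the receiver. Both sides build the same dictionary from the data itself, which is why this approach suits images whose statistics are not known in advance.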

Knowledge of information theory is required to understand and evaluate the various VLCs. Information theory is an application of probability theory. What is information? If a man bites a dog, then it is news. This is because the chance of the event occurring is very low, and that instills an interest to read about it. Put another way, its information value is very high. (Please don't confuse this with the computer scientist's usage of the word information.)
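The "man bites dog" idea has a simple formula behind it: an event with probability p carries -log2(p) bits of information, and the average over all events is the entropy of the source. The probabilities below are invented purely for illustration.

```python
import math


def self_information(p):
    """Information, in bits, carried by an event of probability p."""
    return -math.log2(p)


def entropy(probs):
    """Average information, in bits per symbol, of a source."""
    return sum(p * self_information(p) for p in probs if p > 0)


p_dog_bites_man = 0.99   # hypothetical: a common, boring event
p_man_bites_dog = 0.01   # hypothetical: a rare, newsworthy event

print(f"dog bites man: {self_information(p_dog_bites_man):.3f} bits")
print(f"man bites dog: {self_information(p_man_bites_dog):.3f} bits")
print(f"entropy of this source: {entropy([0.99, 0.01]):.3f} bits per event")
```

Entropy is the yardstick against which a VLC is evaluated: no lossless code can use fewer bits per symbol, on average, than the entropy of the source.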

Duplicating digital content is a simple affair. So content creators were forced to find ways and means to curb piracy. Digital watermarking is one such solution. Here copyright information is stored inside the image. The presence of a TV channel logo on television programmes is a very good example. Invisible watermarking schemes are also available. Steganography is the art of hiding text in images. Functionally, digital watermarking and steganography are similar, but their objectives are totally different.
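Hiding text inside an image can be sketched with least-significant-bit (LSB) embedding. This is one simple, well-known technique chosen for illustration only. It is fragile, since lossy compression destroys the hidden bits, and it is not a robust watermarking scheme. The pixel values and the message are made up.

```python
def embed(pixels, message):
    """Hide the bytes of message in the lowest bit of successive pixels."""
    bits = [(byte >> (7 - i)) & 1 for byte in message for i in range(8)]
    if len(bits) > len(pixels):
        raise ValueError("message too long for this image")
    stego = list(pixels)
    for idx, bit in enumerate(bits):
        stego[idx] = (stego[idx] & ~1) | bit  # overwrite the lowest bit
    return stego


def extract(pixels, n_bytes):
    """Read n_bytes back from the lowest bits of the pixels."""
    out = bytearray()
    for b in range(n_bytes):
        value = 0
        for i in range(8):
            value = (value << 1) | (pixels[b * 8 + i] & 1)
        out.append(value)
    return bytes(out)


cover = list(range(100, 132))        # hypothetical 32 gray scale pixels
secret = b"Hi!"                      # hypothetical hidden text
stego = embed(cover, secret)
print(extract(stego, len(secret)))
print(max(abs(a - b) for a, b in zip(cover, stego)))
```

Each pixel changes by at most one gray level, which the eye cannot notice.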

Note

The objective of this post is to give an overview of the Transfer block. For more information, please Google the highlighted phrases.

[1] "What is exactly atsc" [Online]. Available: http://www.hdtvprimer.com/issues/what_is_atsc.html