Digital Television

Federal Communications Commission (FCC) of USA established Advanced Television Systems Committee (ATSC) in 1995 to form Digital Television (DTV) standards. In 1996 DTV standard was adopted in USA. This standard was adopted by Canada, South Korea, Argentina and Mexico. As per original plan of FCC no analog broadcast after February 2009 in USA.

One of the variant of DTV is High Definition Television (HDTV). ATSC proposed 18 different DTV formats out of which 12 are SDTV formats and remaining are in HDTV format. A DTV to be qualified as a HDTV should satisfy following five parameters. They are

- Number of active lines per frame (≥720),

- Active pixels per line (≥1280),

- Aspect ratio (16:9),

- Frame rate (≤30), exception permitted

- Pixel shape (square).

Please note DTV with aspect ratio of 16:9 and frame size of 480x704 is available. But it is not HDTV format as it not having required active lines per frame.

The six variant of HDTV format can be broadly classified into two groups based on frame size. First group has 1080x1920 frame size with 24p, 30p (progressive) and 30i (interlaced) frames per second (fps). Second group has 720x1280 frame size with 24p, 30p, 60p fps. Square pixels are widely used in computer screens. Progressive scanning is suitable for slow moving objects and interlaced scanning is suited for fast paced sequences. Minimum 60 frames per seconds are required to compensate its deficiency. DTV is designed to handle 19.39 Mbps only. This bit rate is not sufficient to handle 1080p with 60 fps. DTV’s SDTV formats can be broadly classified into three categories. First one is with 480x640 frame size, 4:3 aspect ratio, square pixel and with 24p, 30p, 30i and 60p fps. This is very similar to the existing analog SDTV format. Remaining two uses 480x704 frame size.

HDTV camcorder

HDTV camcorders were introduced in 2003. A professional camera recorder (camcorder) will have 2/3” inch image sensor with 3 chips, color view finder, and 10x or more zoom lens. In professional camcorders cables connectors are provided in the rear of the camera. The interface standards are IEEE Firewire or High Definition Serial Digital Interface (HDSDI). Coaxial cables are connected and content is sent to monitor or a Video Tape recorder.

A consumer grade camcorder uses 1/6” inch image sensor with single chip and 3.5” LCD screen to view. It weighs around a kg and cost is less. Recorded content will be stored video cassette or hard disk.

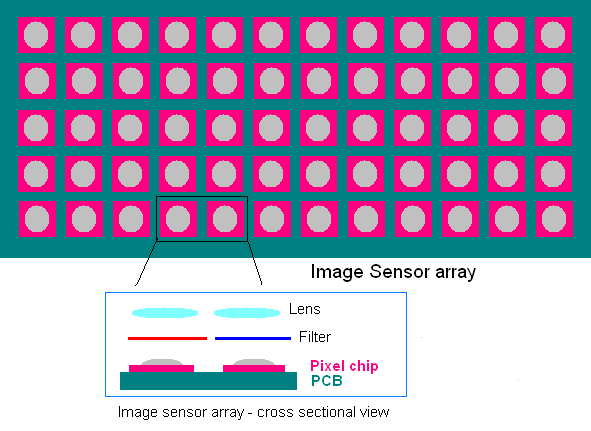

Professional camera like Dalsa origin has an image sensor array of 4096 x 2048. At a rate of 24 fps it generates 420MB/second [2, pg. 165]. It means that a CD will be filled with 90 seconds of data. Image sensor array made up N x M pixels. These pixels may be made up of Charge Coupled Device (CCD) or Complementary Metal Oxide Semiconductor (CMOS). Consumer grade camcorders and Digital camera use CMOS pixels. Following section will compare CCD and CMOS [2].

- CMOS releases less heat and consume 100 times less power than CCD.

- CMOS can work well with low light conditions.

- CMOS is less sensitive and they always have transistor amplifier with each pixel. In CCD there is no requirement of transistor amplifier so more pixel area is devoted to sensitive area. (refer Figure)

- CMOS sensors can be fabricated on a normal silicon production line that is used to fabricate microprocessor and memories. CCD needs a dedicated production line.

- CMOS have the problem of Electrostatic discharge.

Bottom most layer on image sensor array is Printed Circuit Board (PCB). On the top of it pixel chips are mounted. Over that light sensitive sensor is placed (not indicated in the figure, light gray convex shape). Please note entire pixel chip is not devoted to sensor. The incoming light from dichoric reflectors are focused on each pixel chip by a lens. If it is a single chip camera then a primary color filter is placed in-between lens and sensor's sensitive area.

DTV reception

Digital TVs are made to receive DTV broadcast signals. Set-top-box is external tuner system that can receive DTV signals and feed old analog television with analog signals. Color TV took 10 years to achieve five percentage market penetration. DTV in seven years achieved 20 percentage market penetration. Most of the satellite broadcast is in digital format and set-top-box in the receiver end generates analog signals which is suited to analog TVs. Days are not far off to have digital TV all over the world.

Source

- Chuck Gloman and Mark J. Pestacore, “Working with HDV : shoot, edit, and deliver your high definition video,” Focal press, 2007, ISBN 978-0-240-80888-8.

- Paul Wheeler, “High Definition Cinematography,” Focal Press, Second edition 2007. ISBN: 978-0-2405-2036-0

- http://www.scottpeter.pwp.blueyonder.co.uk/new_page_11.htm

- http://www.scottpeter.pwp.blueyonder.co.uk/Vintagetech.htm